OpenAI GPT-4 Image Input – It’s Finally Here

This feature article was contributed by Dan Huby, CTO at Montala, the company behind DAM platform ResourceSpace.

Earlier this year I wrote an article about GPT-4’s multimodal capability. In March, when I wrote the article, it hadn’t been publicly released yet – but it’s finally here. GPT-4 is the latest iteration of OpenAI’s advanced AI, and multimodal support means it’s capable of understanding images and not just text. In my article I explained why I felt this would be a game changer for DAM systems, not only by enabling much more accurate automatic categorisation of assets, but also potentially by allowing for creative content creation, designer inspiration and improved asset recommendations.

OpenAI have now released multimodal support, first via ChatGPT, and now via their API (so capable of being integrated into a DAM), albeit only as a limited preview allowing for the processing of 100 images a day.

It’s speculative, but the extended timeline for release could be attributed to the ethical, legal, and safety implications inherent in advanced image detection technology. Navigating this complex landscape presumably involves overcoming multiple obstacles and necessitates considerable fine-tuning of the model. A notable aspect of its current iteration is the deliberate design choice to avoid individual identification, a decision that undoubtedly plays a crucial role in upholding privacy and safety standards.

How Does it Perform?

In my earlier article, by way of explanation, I provided a sample image and simulated a number of scenarios (using GPT, but with my own textual description of the image as input). I thought it’d be interesting to run these examples again using the GPT-4 integration the team at Montala recently incorporated into ResourceSpace.

Image Descriptions

Firstly, we’ll focus on creating a basic description of the image. This type of description is a fundamental metadata field widely used by our customers. Traditionally, it’s filled in manually, at the time of upload, or beforehand via embedded metadata.

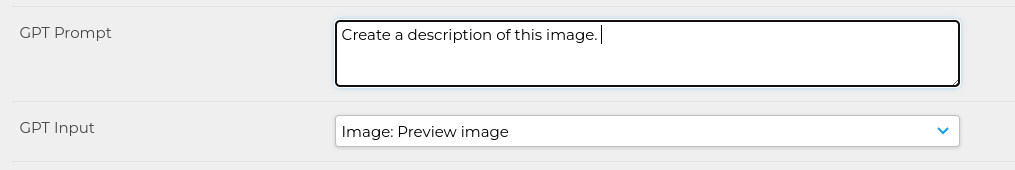

With the new integration, the image description field can now be automatically populated using GPT-4 once the image is uploaded. To achieve this, we simply configure a metadata field with a prompt instructing GPT-4 on our requirement. In this instance, the prompt is straightforward: “Create a description of this image”.

So how does it perform? After uploading the test image used in the original article, the metadata field is set as follows:

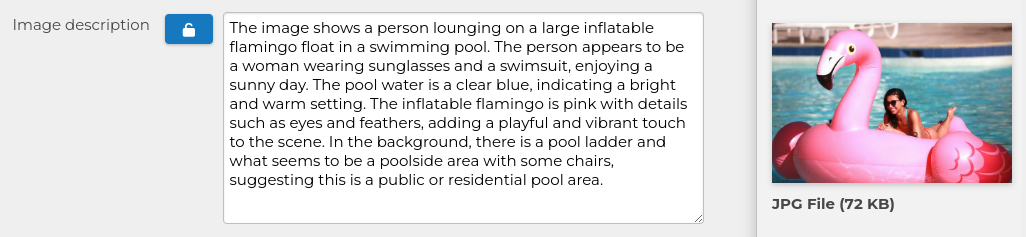

The field contents are as follows: “The image shows a person lounging on a large inflatable flamingo float in a swimming pool. The person appears to be a woman wearing sunglasses and a swimsuit, enjoying a sunny day. The pool water is a clear blue, indicating a bright and warm setting. The inflatable flamingo is pink with details such as eyes and feathers, adding a playful and vibrant touch to the scene. In the background, there is a pool ladder and what seems to be a poolside area with some chairs, suggesting this is a public or residential pool area.”

The AI’s ability to extract such an intricate level of detail from a single image is truly remarkable. It not only identified the nuances on the flamingo but also captured the ‘playful and vibrant’ essence imparted by its pink colour, demonstrating an almost human-like interpretation. However, it’s intriguing to note that the AI misinterpreted certain elements, perceiving ladders where there were none, likely mistaking the appearance of chairs for ladders. This instance highlights the AI’s sophisticated yet imperfect analytical capabilities.

My earlier simulated response was a little more reserved and less descriptive: “A joyful woman wearing a stylish swimming costume and sunglasses relaxes on a vibrant, oversized inflatable flamingo in a sunlit swimming pool. She exudes happiness as she basks in the warm sunshine, enjoying a leisurely summer day.”

The initial results are promising: this level of detailed description could be incredibly useful and not far from human entered content. The user is able to edit the field, so if the description isn’t quite right it can easily be changed. Having such high quality text as a starting point should be a huge time-saver.

Now, let’s turn our attention to the next critical aspect – categorisation.

Image Categorisation

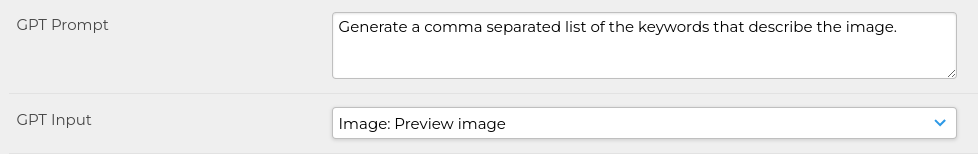

What keywords would GPT-4 suggest for the same image? Again we configure a metadata field with a prompt requesting keywords:

While the prompt I used was relatively broad, its flexibility allows for customization to fit specific scenarios. For instance, by directing GPT to focus on particular elements within a photo, such as a company’s products, we can tailor the output to more specialised needs. Alternatively, providing GPT with a predefined list of keywords could streamline the process, guiding it to select from a curated set rather than generating keywords independently. This adaptability underscores the potential of GPT to be fine-tuned for a wide range of use cases.

Here’s how that prompt worked for the test image:

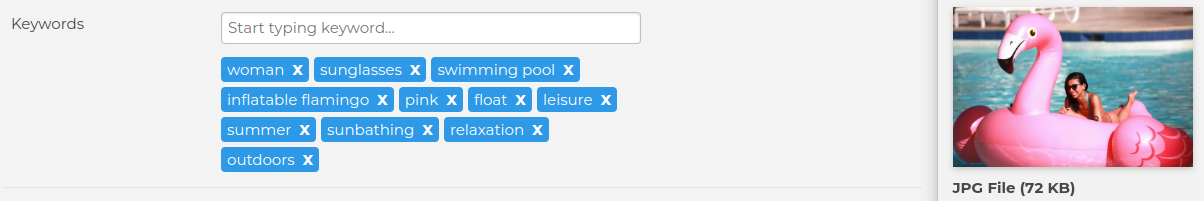

The returned keywords were: “woman, sunglasses, swimming, pool, inflatable flamingo, pink, float, leisure, summer, sunbathing, relaxation, outdoors”

In my article the simulated output was: “woman, swimsuit, inflatable flamingo, swimming pool, sunglasses, relaxation, sunshine, summer”

So, much the same, and I think you’ll agree those are very good keywords for the image, with no issues or false matches / hallucinations at all.

For comparison with earlier technologies here’s the output from Google Vision, which is popular with our customers.

This output seems to be somewhat useful, yet the unexpected inclusion of terms like “organ” and “automotive design,” along with the notable absence of “flamingo,” is perplexing. These discrepancies highlight the superior capabilities of OpenAI’s GPT, which not only demonstrates more accurate and relevant keyword generation but also suggests its potential to quickly outpace Google Vision in this domain. Given these advancements, it wouldn’t be surprising to see Google announce an update or even a new successor to Google Vision in the near future to stay competitive in this rapidly evolving field.

Marketing Copy

This is where it gets more interesting. The integration of multimodal support into DAM systems has the potential to revolutionise the way marketing teams create and repurpose content and could significantly speed up the content creation process.

Again we’ll set up a new metadata field, this time called “Marketing copy”, and ask GPT to populate it with some text based on the uploaded image.

Here’s the output for our test image.

That text in full is: “Dive into summer with style and make a splash with our fabulous Giant Pink Flamingo Pool Float! Perfect for pool parties, beach days, and vacation fun, this trendy inflatable is your ticket to unbeatable relaxation and Instagram-worthy moments. Crafted with durable, high-quality materials, it’s designed to hold up for endless hours of lounging under the sun. Its generous size comfortably accommodates adults, ensuring you can float in luxury. Don’t just float—flaunt with our Giant Pink Flamingo Pool Float and be the envy of every poolside gathering! Get yours today and let the good times drift in.”

It’s quite remarkable how the AI not only generated a detailed description but also coined a catchy slogan: “Don’t just float—flaunt.”. While I would advise marketing teams to refine and customise this AI-generated content to suit their specific needs, it undeniably serves as a solid foundation for crafting engaging real-world copy. This level of creativity and precision from an AI system is not only impressive but also indicative of its potential as a valuable tool in content creation.

My earlier simulation, when giving GPT a hand-created textual description of the image, was:

“Make a splash this summer with our vibrant, oversized inflatable flamingo! Experience the ultimate relaxation as you float effortlessly on crystal-clear pool waters, just like the happy sunseeker in this photo. Perfect for pool parties, beach getaways, or simply soaking up the sun in style, our giant flamingo ensures that you’ll be the envy of everyone. Grab your shades, slip into your favourite swimsuit, and get ready to create unforgettable memories on this fabulous floating flamingo!”

So, not too dissimilar from the actual output. I think we can conclude that GPT-4 multimodal support meets or even exceeds my expectations across all three examples above, and should certainly be suitable for real world use once the daily 100 image limit has been lifted.

As of now, we’ve not yet explored other promising avenues discussed in my earlier article, notably bespoke asset recommendations, content moderation, and creative support. Bespoke asset recommendations could transform user engagement with DAM systems, providing personalised suggestions that align with individual requirements or previous interactions. Content moderation, facilitated by GPT-4, has the potential for more refined and effective filtering, adeptly identifying and excluding irrelevant or unsuitable content. Regarding creative support, the prospects are extensive – from assisting in the generation of novel ideas (as we saw in the example above) to providing input on design and layout enhancements. We’re actively developing these aspects within our existing integration and these topics may warrant a further article or two.

It’s evident that GPT-4’s multimodal capabilities bring a substantial new tool to the world of DAM systems. This isn’t just about technology for technology’s sake; it’s about practical, real-world applications. The accuracy in image description, keyword suggestion, and even the creation of marketing copy is impressive, but what’s even more exciting is the potential for future developments.

We’re just scratching the surface with what AI can do in this space. While there are limitations, such as the hallucination that we saw a little of above – the future possibilities, from enhanced asset recommendations to improved content moderation, are promising. As we continue to test and integrate these features, it’s clear that the journey with AI in digital asset management is just beginning.

About Dan Huby

Dan Huby is Chief Technology Officer at Montala Limited, the company behind open source DAM software ResourceSpace. Thanks to Claudio Scott for the use of his photograph via Pixabay.

You can connect with Dan via his LinkedIn profile.

Share this Article: