5 Questions about AI to a CPO: Kevin Souers, Aprimo

This feature article is part one of a series contributed by Paul Melcher, visual technology expert and founder of online magazine Kaptur.

In the second of a series on DAM vendors and AI deployment, Kevin Souers, CPO, gives an overview of how Aprimo develops and deploys AI technologies in its solution. Aprimo is one of the few companies that has fully embraced generative AI, offering a variety of integrated solutions, like background removal or semantic search, aimed at streamlining workflows and enhancing user experience.

1. Aprimo is one of the first DAM vendors to implement generative AI solutions like background replacement and semantic search. What motivated you to implement these AI solutions when many others seem to be holding back?

Aprimo has a strong history of leading the DAM industry in AI adoption, starting with visual smart tagging nearly a decade ago. Our decision to aggressively implement generative AI solutions was driven by the aim to significantly increase organizational efficiency, ROI, and the ability to deliver on omnichannel personalization for our clients, making their content operations more efficient and impactful. We balance that with the need to provide reliable, scalable, and secure technology to our customers, many of whom are in heavily regulated industries. We prioritize governance to ensure that these powerful new tools are used safely and effectively, preventing issues like “Shadow IT” and ensuring compliance.

2. Do you have customers currently using Aprimo’s generative AI-powered solutions? Can you share who they are and how they are using these solutions? If not, can you share the feedback from testers?

Yes. Many of the world’s largest companies have embraced our vision, tested early versions in the first half of 2023, and are now utilizing these solutions to achieve previously unattainable results. Early on, Aprimo identified foundational generative AI services for investment that we are now able to build new capabilities on rapidly. This forward-thinking approach has proven successful as the market and use cases have evolved. We’ve now added to those capabilities with our announcement last week of the acquisition of Personify XP, which uses machine learning to identify the ideal personalized digital experiences and underlying content for known and anonymous visitors to digital properties. This groundbreaking technology, already used by leading brands, consistently delivers remarkable ROI through increased revenue and significant KPI improvements. We will be sharing more information with our customers on this acquisition in the coming weeks and how it will further enhance the strategic value of the DAM category.

3. You are releasing AI solutions one by one. How do you decide which solution to release first? What criteria do you use to prioritize these implementations?

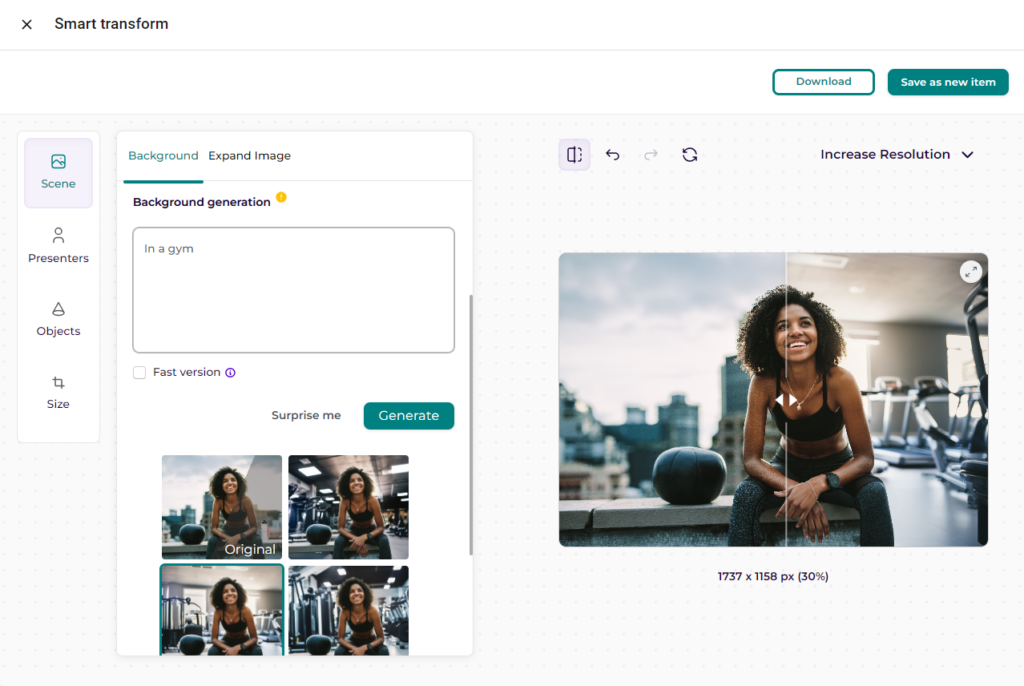

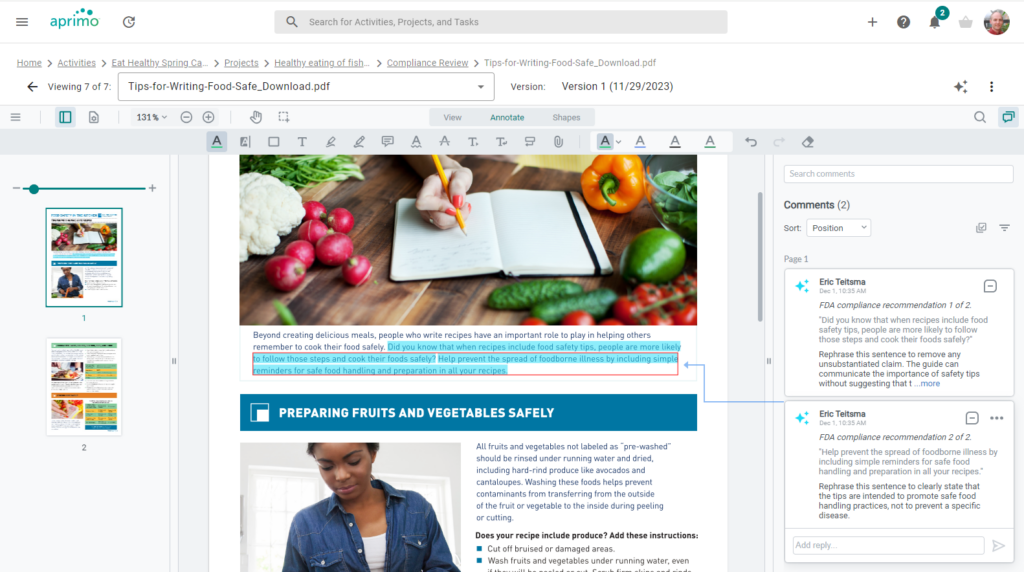

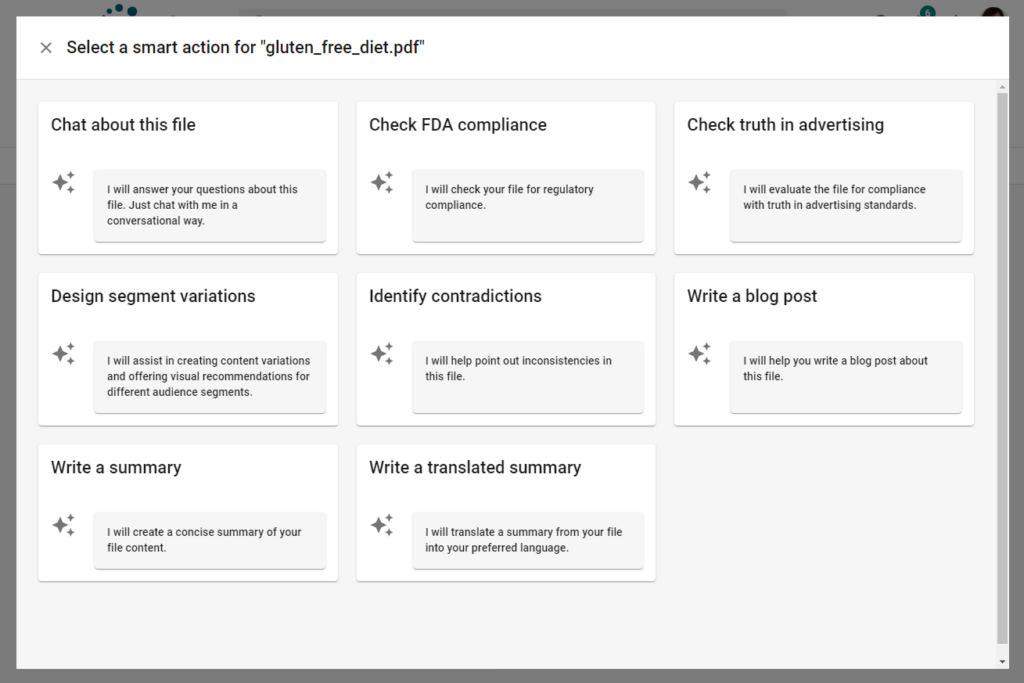

Aprimo has a long and successful track record of AI innovation, including the use of computer vision, machine learning, AI-powered process automation, and more – going back many years. To that, we added a next level of generative AI capabilities in 2023, which are now being combined in various ways driven by collaboration with our fastest-moving customers. We prioritize feature implementation based on immediate customer needs and potential impact. For example, this quarter we are building reusable features that combine our Rules engine with an embedded visual genAI model to help a leading retail customer automatically standardize the backgrounds of hundreds of thousands of product images for consistent ecommerce presentation. Another feature, which is released but still going through iterative development, applies AI-generated metadata upon upload to automate content routing. That routing is followed by customer-configured, AI-powered first-pass contextual reviews (e.g., brand voice, compliance, claims, AI detection) that kick unacceptable content back to the originator with guidance, so that downstream human intervention happens only where it adds the most value later in the process.
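For readers who want a concrete picture of the upload, routing, and first-pass review flow described above, the following is a minimal Python sketch. It is purely illustrative and is not Aprimo's implementation or API: every name in it (`Asset`, `tag_on_upload`, `route`, `claims_check`, `brand_voice_check`, `first_pass_review`) is hypothetical, and simple keyword rules stand in for the AI models.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Asset:
    name: str
    text: str
    metadata: dict = field(default_factory=dict)


# A check returns None when the asset passes, or a guidance string when it fails.
Check = Callable[[Asset], Optional[str]]


def tag_on_upload(asset: Asset) -> Asset:
    """Hypothetical stand-in for AI-generated metadata (a keyword rule, not a model)."""
    asset.metadata["type"] = "claim" if "guarantee" in asset.text.lower() else "general"
    return asset


def route(asset: Asset) -> str:
    """Choose a review queue from the generated metadata."""
    return "compliance" if asset.metadata.get("type") == "claim" else "brand"


def claims_check(asset: Asset) -> Optional[str]:
    if "guarantee" in asset.text.lower():
        return "Remove or substantiate the word 'guarantee'."
    return None


def brand_voice_check(asset: Asset) -> Optional[str]:
    if asset.text.isupper():
        return "Avoid all-caps copy."
    return None


# Customer-configured checks per queue (assumed configuration for illustration).
CHECKS: dict[str, list[Check]] = {
    "compliance": [claims_check, brand_voice_check],
    "brand": [brand_voice_check],
}


def first_pass_review(asset: Asset) -> dict:
    """Tag, route, and review; return content to the originator if any check fails."""
    queue = route(tag_on_upload(asset))
    guidance = [msg for check in CHECKS[queue] if (msg := check(asset)) is not None]
    status = "returned_to_originator" if guidance else "forwarded_to_human_review"
    return {"asset": asset.name, "queue": queue, "status": status, "guidance": guidance}


if __name__ == "__main__":
    print(first_pass_review(Asset("ad1", "We guarantee results.")))
    print(first_pass_review(Asset("ad2", "Fresh looks for spring.")))
```

In this sketch, only assets that pass every configured check move on to a human reviewer, which mirrors the idea of reserving human attention for the later stages where it adds the most value.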

4. Are you developing AI models internally, or are you using open-source or licensed options? Can you explain why you chose that approach, who you partnered with, and why? Is this a long-term strategy?

We are leveraging a combination of best-fit approaches across different types of AI – machine learning, visual models, predictive, large language models, and others. Some of these are proprietary, and others take foundational models from trusted sources, then train them for specific use cases or customer data sets. We keep any use or training of models within customer tenants to ensure customer-specific data privacy without sending training data back to the foundational model. Our primary textual model is GPT-4, procured via Microsoft Azure, ensuring robust IP protection and compliance, especially important for our customers in regulated industries and our FedRAMP offering. Governance considerations are also important for visual models, in order to mitigate IP risks and align with our commitment to ethical AI. This approach not only ensures technology excellence but also legal and brand protection, proving to be a wise decision for our global customers.

5. What are the limits of integrating AI in a DAM? For example, some argue that providing editing tools in a DAM exceeds what a DAM should offer. What are your boundaries for AI integration?

I love this question. The debate on what editing tools should be in a DAM is now turned on its head. The primary job of the DAM is to provide easily findable, easily leveraged, compliant content; facilitate efficient reuse of that content; help customers leverage modular content; and ultimately orchestrate the creation of localized and/or personalized variants for specific customer experiences and segments. While DAMs do many things, this core use case is crucial.

In the past, the adoption of embedded editing tools by individual business users has been low, as they often prefer more sophisticated desktop creative apps or just to rely on creative teams. However, the game has changed with AI-powered capabilities that can be invoked at scale FOR users rather than BY users. This systematic enterprise-level usage is how companies achieve the 30-50% capacity increases analysts project with the generative AI evolution. For example, content localization and editing will be rule-based, making the materials available for download without the need for users to handle templates manually. The traditional template technology, which has been central to self-service localized content generation for the past decade, is likely to become obsolete within a year due to these new tools.

As we transition, we’ll still cater to power users who wish to further edit content after it has been automatically generated, serving the industrious DIY segment who show remarkable passion for content creation. This balanced approach ensures that we continue to support all our users while leveraging the full potential of generative AI to drive efficiency and innovation.

About Paul Melcher

Paul has over 20 years’ experience working in the visual technology sector, and is the Founder and Director of Melcher System LLC. He also has extensive expertise in generative AI, image recognition and content licensing. You can discover the latest news, technologies and trends in the visual sector via his online magazine Kaptur.

You can connect with Paul via his LinkedIn profile.
