5 Questions about AI to a CTO: Oliver Grenet, Wedia

This feature article is part one of a series contributed by Paul Melcher, visual technology expert and founder of online magazine Kaptur.

In today’s rapidly evolving digital landscape, the integration of generative AI into Digital Asset Management (DAM) systems is transforming the way we handle and use visual content. To demystify the intersection of AI and DAM, we are launching a series of interviews with the CTOs and CPOs of leading DAM companies. Our goal is to explore their experiences, challenges, and successes in implementing generative AI solutions.

Throughout this series, we will ask each leader the same five questions, aiming to uncover diverse perspectives and innovative approaches. We kick off the series with Oliver Grenet, CTO at Wedia, who shares his insights on how Wedia is pioneering the use of generative AI to enhance its DAM capabilities.

1. Wedia is one of the first DAM vendors to implement generative AI solutions like background replacement and semantic search. What motivated you to implement these AI solutions when many others seem to be holding back?

At Wedia, we have always considered AI to be a highly relevant technology for image and video processing. Since the launch of our DAM, we have invested in a dedicated team of data scientists and AI engineers who constantly work on these topics through POCs and gradual integration into our solution. This expertise has allowed us to closely follow the evolution of image-related models and navigate through phases of disillusionment and progress.

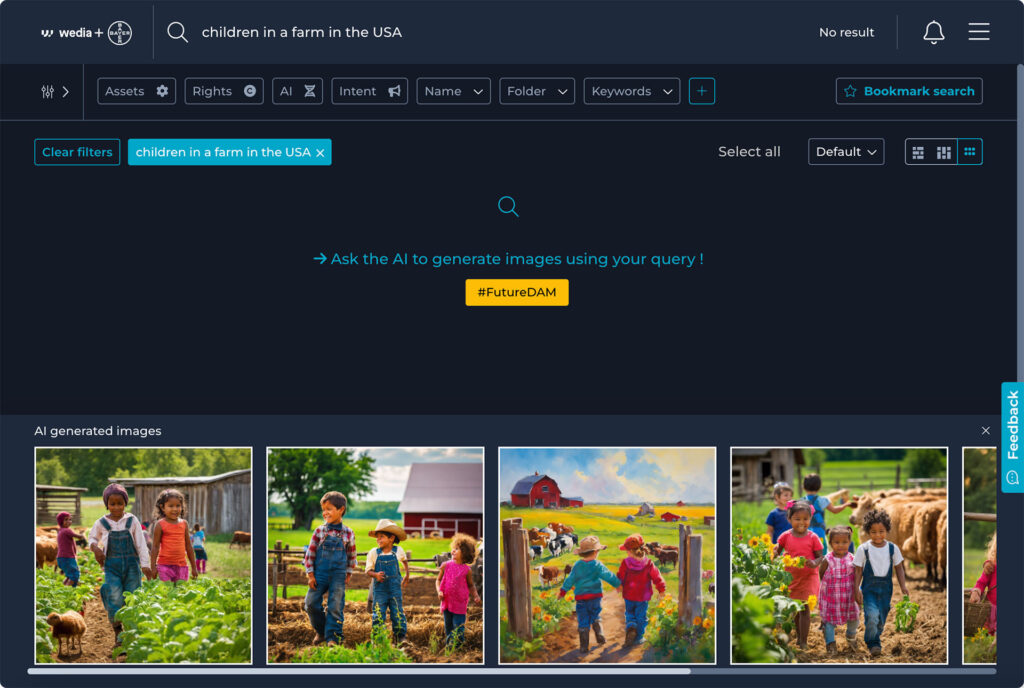

Our clients have been eager to see generative AI integrated into the DAM ever since Midjourney and similar models became popular. Because we were already in ongoing discussions with them about these topics, the technical team immediately built this demand into its work, using real use cases supplied by clients to move beyond the demo stage and deliver generative AI that addresses real business problems.

2. Do you have customers currently using Wedia’s generative AI-powered solutions? Can you share who they are and how they are using these solutions? If not, can you share the feedback from testers?

Currently, we offer a test version on a separate branch of Wedia that integrates all our advances in generative AI. This version is accessible to some of our clients, although I cannot name them explicitly. However, I can share the feedback we have received so far.

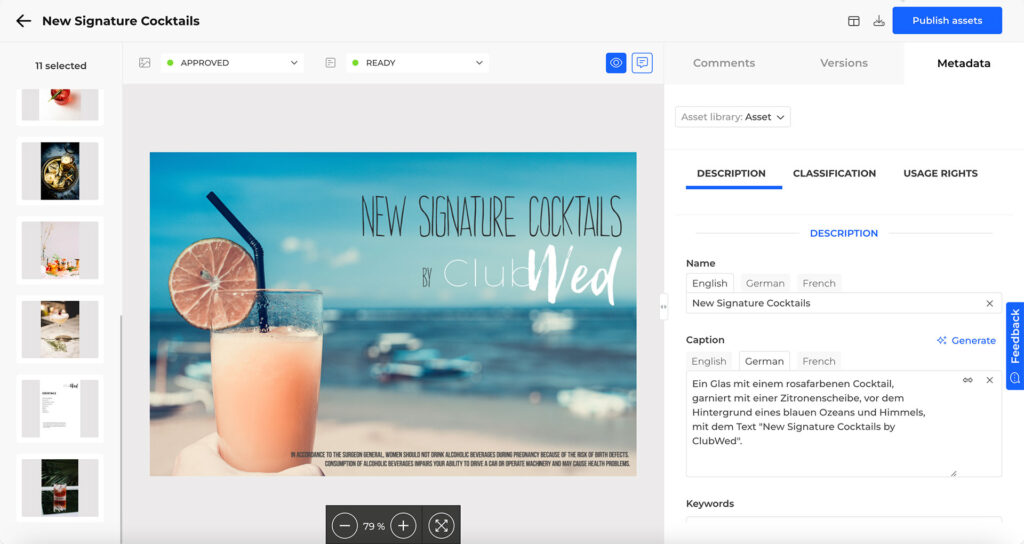

First of all, we have put a lot of effort into improving image rendering. The initial models generated very synthetic, almost “3D” renders, which did not meet our clients’ expectations. We therefore worked to restore accurate colorimetry and to ensure adherence to our clients’ graphic and photographic guidelines, so that the results match their visual identity as closely as possible. This was our first major challenge.

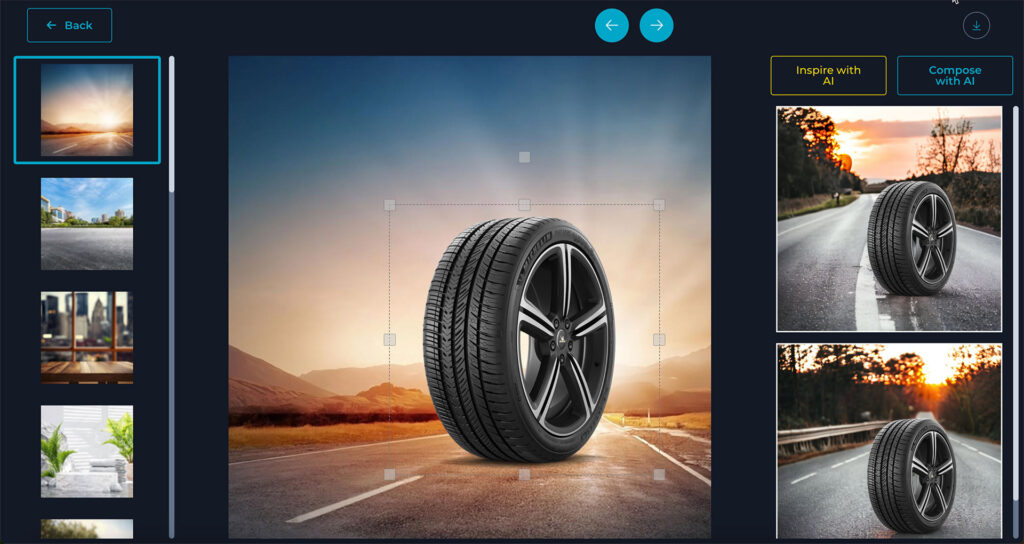

The second challenge, requested by our clients, was to generate images for specific needs rather than in a generic way. We moved beyond the simple prompt stage by integrating generative AI into business processes: for example, creating local versions of an image from a “global” kit, or placing products on backgrounds pre-approved by the brand rather than generating them randomly from a prompt.

3. You are releasing AI solutions one by one. How do you decide which solution to release first? What criteria do you use to prioritize these implementations?

Our approach has evolved considerably. Initially, we explored every possible area of AI, but we have since adopted a much more structured method for deciding which solutions to implement first.

First, we conduct POCs (proofs of concept) on specific business needs reported by our clients to validate the technical feasibility of each solution. Once feasibility is validated, we present these POCs to clients for testing. Only after confirming that the features meet a real business need do we move on to the “discovery” phase.

This discovery phase includes an in-depth exploration of all necessary research areas, such as user interface, user experience, and workflow integration. We create prototypes that we present to our clients to ensure that generative AI is integrated intelligently and intuitively into their business processes.

4. Are you developing AI models internally, or are you using open-source or licensed options? Can you explain why you chose that approach, who you partnered with, and why? Is this a long-term strategy?

I’m going to share some trade secrets here :) We have adopted a hybrid method that combines the use of proprietary models and open-source models.

To address specific business challenges, we have developed proprietary models. These models are trained on Wedia’s internal datasets, not on client data, to solve very particular problems.

We combine these “internal” models with open-source and commercial models. We have worked with our legal team to carefully select providers that guarantee complete confidentiality of our clients’ data. It is essential for us that our clients’ data is never used for training general models.

We have selected Anthropic as our global AI provider. Their cloud model ensures complete data confidentiality. By using Anthropic’s solutions, we can guarantee our clients that their data is used exclusively for their own needs and is never incorporated into general training models. Moreover, Anthropic respects data localization zones, which allows us to comply with GDPR requirements.

5. What are the limits of integrating AI in a DAM? For example, some argue that providing editing tools in a DAM exceeds what a DAM should offer. What are your boundaries for AI integration?

I believe that those making this argument can only envision a basic integration of DALL-E, Firefly, or Midjourney that adds no customization or value. Once again, we integrate tools with the goal of enabling an existing business process, replacing a manual or external action with GenAI. In the long run, what will make the difference, and what will give AI its legitimacy in a DAM, is the quality of that integration.

About Paul Melcher

Paul has over 20 years’ experience working in the visual technology sector, and is the Founder and Director of Melcher System LLC. He also has extensive expertise in generative AI, image recognition and content licensing. You can discover the latest news, technologies and trends in the visual sector via his online magazine Kaptur.

You can connect with Paul via his LinkedIn profile.
