The Implications of Generative AI for Digital Asset Management

This feature article was written by Ralph Windsor, Editor of DAM News and Project Director of DAM Consultants, Daydream.

In this article, I intend to consider the implications of Generative AI (sometimes referred to as ‘Synthetic Content’) for Digital Asset Management. First, some background to the topic for the benefit of those who have yet to encounter it.

Generative AI allows the creation of new content using text descriptions, existing images, video or audio. AI algorithms are used to identify underlying patterns in source material and (in combination with their own interpretations) produce an artwork that is unique and representational in nature. The selection of the sources could be explicit (i.e. an asset is directly provided). Alternatively, the sources might be inferred from a text description which acts like a specification or brief.

One fairly well-known example of this technology, which has been around for a few years, is the generation of faces of fake people, such as This Person Does Not Exist. In reality, it is not that the person does not exist, more that the algorithm has synthesized characteristics from hundreds of thousands of different faces to produce one where human beings cannot identify the individual source photos. Another example is the ability to render a photo so that it looks like a painting.

These are interesting, but quite narrow in terms of their scope and potential use. One of the recent demonstrations of Generative AI is an approach where the user enters a text description of what they require and the result is generated there and then. As well as photos and artwork, videos, text, audio and even basic computer games have been created. Some popular examples of tools which enable this sort of functionality include DALL-E, Midjourney, Stable Diffusion and Bria (who integrate specifically with DAM systems).

How Might This Be Used for DAM?

The use of keyword-based interfaces to drive Generative AI invites some comparison with DAM technology because the approach is obviously a familiar one for DAM users when they search for assets. The current use-case for DAM has not altered a great deal in the last 30 years since the first systems began to emerge around 1990. Probably the single largest change thus far has been the removal of the digitisation stage, where analogue material previously needed to be converted to digital using scanners etc. before it could be ingested into the DAM.

In 2022, nearly every asset is ‘born digital’, i.e. created using a digital device or on a computer to start with. Yet, despite this, even without the digitisation process, a physical/analogue source is still often required, especially for richer media like images and videos which are the mainstays of most DAM systems. Generative AI takes the rationalisation process one step further so that (theoretically) there is no source which physically exists in the real world to act as the starting point.

This places Generative AI (at least partly) in the realm of creative tools like Photoshop. The difference is that no production or creative skills are required to produce commercially usable results. As I write, Microsoft have announced that Bing, Microsoft 365 and Edge will now offer an option to generate media from a user’s search query using DALL-E 2. My expectation is that Google, Adobe (and others) will quickly follow suit with similar announcements and integrations of their own. The implication is that the need to go outside the application you are currently using to source production quality media is now reduced.

Metadata – Before the Fact Rather Than the Afterthought

One particularly interesting aspect of Generative AI and its relationship with DAM concerns the role of metadata. The definition of digital assets which I subscribe to is that they are a combination of essences (i.e. binary data or intrinsic value) and metadata (extrinsic value). That still holds true in the context of Generative AI, but the role of metadata becomes more significant. When most users talk about ‘digital assets’, they tend to think in terms of the essences. For example, if you ask someone to download a digital asset, this generally implies that only the file will be retrieved, not the metadata.

Those lacking much real-world experience of managing digital assets will tend to discount the value of metadata because they mistakenly believe its role is a subservient one and it only exists for some narrow task like search. With Generative AI, however, the metadata becomes like the DNA used to create an asset’s essence. As such, metadata could be before the fact, rather than after it.

The need for a DAM as some huge repository of terabytes or petabytes of data (which has been the trend for DAM in the last 30 years) diminishes since fewer materials need to be retained if asset essences are created using Generative AI – or ‘born generative’ as one might refer to it. While there will probably never be a scenario where there are no archived assets at all in DAMs, Generative AI potentially impacts one of the big fundamental trends in play in DAM which has driven their adoption thus far – the ever increasing volume of binary data that has to be stored, catalogued and archived.
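To make the ‘metadata before the fact’ idea more concrete, here is a minimal Python sketch of how a ‘born generative’ asset might be recorded. The record type `GenerativeAssetRecord` and its fields (`prompt`, `model`, `seed`) are hypothetical and not taken from any DAM product or Generative AI tool. It also assumes that a tool can regenerate the same essence from the same prompt and seed, which will not hold for every tool.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class GenerativeAssetRecord:
    """Hypothetical metadata-only record for a 'born generative' asset."""
    prompt: str  # the text brief that acts as the asset's 'DNA'
    model: str   # name of the generative tool (illustrative value only)
    seed: int    # assumed to make regeneration repeatable

    def asset_id(self) -> str:
        # Derive a stable identifier from the metadata alone.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:16]

    def stored_size_bytes(self) -> int:
        # Size of what the DAM would need to retain for this asset.
        return len(json.dumps(asdict(self)).encode("utf-8"))


record = GenerativeAssetRecord(
    prompt="product on a beach at sunset",
    model="example-model",
    seed=42,
)
print(record.asset_id(), record.stored_size_bytes())
```

The record is tens of bytes rather than the megabytes a rendered image would occupy, which is the storage implication described above. Any asset that a human has subsequently re-touched would still need its essence stored in the usual way.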

The On-going Need for Archives and Human Generated Assets

The previous discussion raises some intriguing possible outcomes. Nonetheless, a consistent trend with new technologies (and AI ones in particular) is that promoters select examples where their tools produce highly optimised results and then use these for marketing purposes. Based on these, prospective users extrapolate the results and assume the tools will be equally effective for their own purposes. This often turns out not to be the case, especially with earlier iterations of the technology. I suspect this might occur initially with Generative AI also. The initial premise will be that ‘anything is possible’, but then nuances and exceptions will emerge where it transpires the algorithm is more successful with some concepts and subjects than it is with others.

I anticipate that artists with photo re-touching skills might find themselves in increased demand to meet a need for media which does not show signs of having been produced by Generative AI, or where the generated media is nearly usable but still needs the ‘human touch’. As I will discuss later, basic re-touching tasks which could be carried out with minimal Photoshop skills may get carried out by the algos, but a significant minority may still need further human intervention.

These modified variants will all need to be stored somewhere. As such, any expectation that Generative AI will precipitate the demise of manually catalogued and stored archives of digital assets is probably (at best) premature.

The Implications for the DAM Market

One point that does seem obvious is that if DAM vendors do not integrate with Generative AI and fail to anticipate its uptake among their users, the scope of their solutions will be more limited than would have otherwise been the case.

At present, DAMs act as a kind of ‘media hub’ because they are the only solution that can effectively connect metadata from across the business to digital essences. In an era of Generative AI, there are other competing technologies that could fulfil that role (the Microsoft announcement referred to earlier is a case in point). Generative AI could be the catalyst for a convergence trend where DAM and creative tools compete with each other to mediate access to this new unified source of archived and (what might be termed) ‘just in time’ digital assets.

One of the example use-cases for Generative AI is to modify an existing image (or video). At present, the manipulation of media files within DAMs is fairly limited. There are some options to do things like cropping, re-sizing, flipping etc. but the tools are basic. With Generative AI, a text interface can be used to instruct the DAM how the user would like to change an existing image in the archive. These derivatives do not need to be stored; they can be rendered dynamically.

To offer a relatively simple example of this: the photo shoot for a brand’s commercial might ordinarily need to take place when the weather is sunny rather than cloudy. The celebrity who appears in it, however, has a very busy schedule and the shoot has to take place on a specific day, even if the weather conditions are not ideal. This sort of issue can be dealt with by getting creative personnel to manually re-touch images. This is relatively simple to achieve in Photoshop (and similar applications) but a DAM system is usually not sophisticated enough to support this sort of direct manipulation. Currently, the original asset has to be uploaded, catalogued, downloaded to a creative tool, retouched and re-uploaded as a separate asset. This increases the management overhead since an association between the two assets (original and modified) has to be made, metadata changes need to be applied twice etc. Further, there is the risk of the two becoming separated from each other if the cataloguing task is not carried out diligently.

For a DAM connected directly to a Generative AI tool this sort of change is (theoretically) quite trivial. The adjustments can be initiated (within the DAM itself) using a text interface, e.g. ‘change this scene so it is a sunny day with blue sky’. Further, the fact they were carried out can be automatically logged so there is full transparency about how the asset got changed, by whom and when.
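The logging described above could look something like the following Python sketch. It is illustrative only: `EditLogEntry` and `AssetEditLog` are hypothetical names, the asset identifiers and user names are invented, and the call to a Generative AI tool is deliberately left out because no particular tool's interface is assumed.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class EditLogEntry:
    asset_id: str     # identifier of the original asset in the archive
    instruction: str  # the text instruction the user typed
    user: str         # who requested the change
    timestamp: str    # when it was requested (UTC, ISO 8601)


@dataclass
class AssetEditLog:
    """Hypothetical log of text-driven edits, keyed to the original asset."""
    entries: List[EditLogEntry] = field(default_factory=list)

    def record(self, asset_id: str, instruction: str, user: str) -> EditLogEntry:
        # Store what was asked, by whom and when, against the original asset.
        entry = EditLogEntry(
            asset_id, instruction, user,
            datetime.now(timezone.utc).isoformat(),
        )
        self.entries.append(entry)
        return entry

    def history(self, asset_id: str) -> List[EditLogEntry]:
        # Everything that has been done to one asset, in order.
        return [e for e in self.entries if e.asset_id == asset_id]


log = AssetEditLog()
log.record("shoot-001", "change this scene so it is a sunny day with blue sky", "jsmith")
log.record("shoot-002", "remove the background", "akhan")
for entry in log.history("shoot-001"):
    print(entry.timestamp, entry.user, entry.instruction)
```

Because each instruction is held against the original asset rather than as a second catalogued file, there is only one set of metadata to maintain, and a derivative could in principle be re-rendered on demand from the original plus its logged instructions.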

These are basic, day-to-day graphic production tasks which currently require a moderately skilled human being to carry out. There are other more intriguing creative opportunities for DAM which hitherto have not been very well explored because the technical challenges were previously considered too demanding.

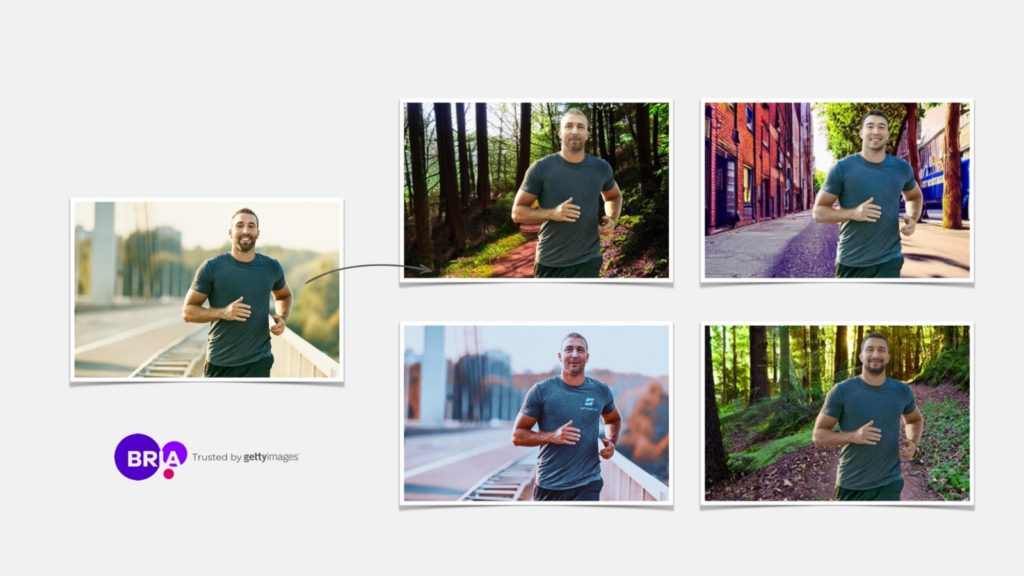

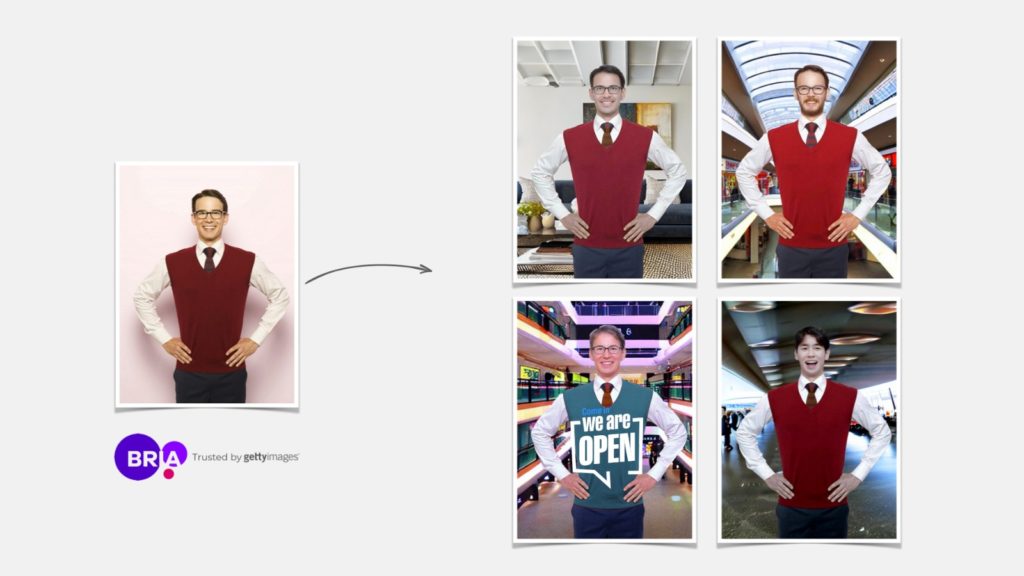

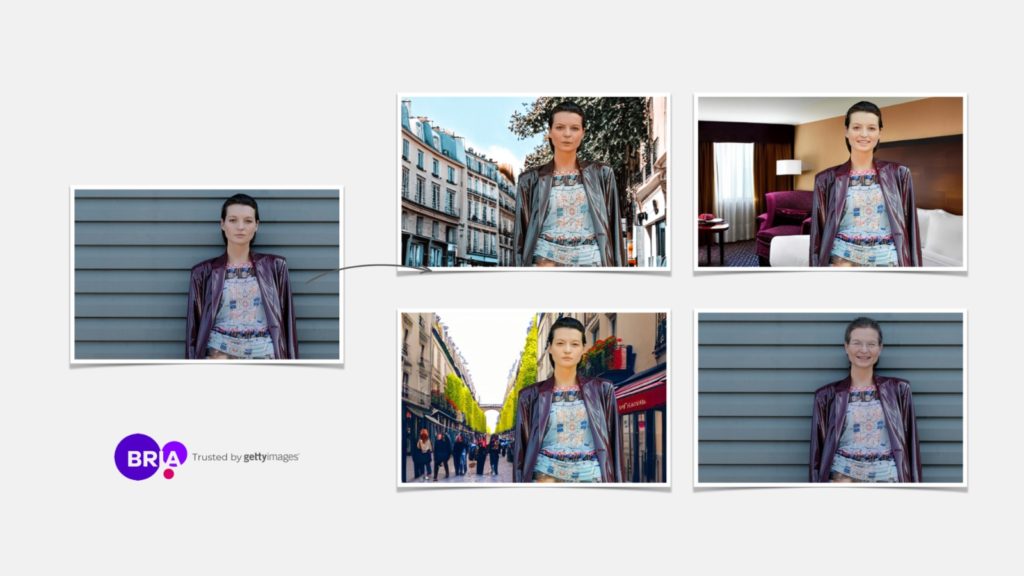

The opportunity for DAM developers is to offer users tools that extend far beyond what Photoshop can offer (at least currently). This is more than simplistic tasks like erasing a scratch here and there or adjusting skin tones. Generative AI tools allow users to repurpose entire photo shoots with simplicity and ease. For example: a company has a photo shoot in their DAM with one model showcasing a product. Tomorrow, they decide to enter a new market. With Generative AI, they can change the ethnicity of the model and the background of the entire photo shoot in a few clicks and a few minutes, with no specialist skills required.

There is no need to hire new models, a new photographer or a new studio, or to carry out further editing, all of which would cost time and resources. The savings, especially in these times of recession, could be very valuable for a company. This feature alone could cover the cost of the DAM, and the process can be repeated as often as required.

I asked Yair Adato, CEO of Bria, what he saw as the potential benefits of Generative AI for the DAM market:

Solutions based on Generative AI will simplify and speed up the process of finding, customising and generating digital content. This will allow more users to create or modify digital assets, possibly inside the DAM itself. It will unlock automation tools that are not possible today. DAMs equipped with Generative AI will become even more important in the digital transformations of organisations, increasing the value of existing digital assets and automating processes on a large scale.

As well as the images below, some examples of Bria in action can be seen in this video. These should give DAM vendors and users some idea of the capabilities of this technology and how it might be applied.

Example images provided by Bria.

Asset Provenance and Digital Ethics

One area where a DAM’s archival capabilities become more important in the era of Generative AI relates to the provenance of the raw materials used to create imagery. As previously discussed, many photo-realistic synthetically generated images are derived from existing archived materials. This is particularly the case with subjects like human faces, but it also applies to others which have rapidly become the visual clichés of synthetic media, such as dogs living in houses made of sushi.

There are all kinds of copyright and moral rights issues which will result from this and a possible scenario is that originators of synthetic media are required to disclose where they got their raw materials from (and if they were properly cleared for use). Currently, many synthetic images are generated from media which have been derived from online sources, such as social media. By contrast, archival-oriented DAMs (of the type available now) offer a method to ensure that media has been legitimately sourced with auditing information to back the assertions up.

It seems inconceivable that legislation will not be drafted to regulate Generative AI. One possibility is that both the generated image and any raw materials used to derive it are marked using one of the available invisible watermarking technologies. If this links back to the DAM, there is visibility across the Digital Asset Supply Chain for AI-generated visual content. In order to offer this, DAMs will need to support these tools to enable tracking outside the DAM. Up until now, this has been a ‘nice to have’ feature, but it might become an essential one to help organisations protect their brands and also shield them from any opportunistic litigation.

Recent contributors to DAM News have discussed the legal risks relating to the use of AI facial recognition technology. Similar challenges may present themselves with Generative AI also. The risk of using synthetically generated content (especially where it is derived from other photos) could dissuade some enterprises from adopting it. The most practical way to limit that risk would be to have complete transparency about the origins of any sources employed.

The Digital Asset Supply Chain is a concept which has grown in popularity among DAM users because it presents a compelling case for leveraging more ROI out of DAMs by connecting them to other systems in use across an organisation. With Generative AI, it becomes even more of a priority because of any potential disclosure obligations which enterprises are required to meet.

To operate cost-effectively in a far more regulated environment, DAMs which use Generative AI may need to depend on digital infrastructure technologies like blockchains and identification verification solutions such as invisible watermarking. Without these, individual DAM providers would have to provide their own means of logging and tracking all this activity – and many will lack the resources to do so.

At present, there are no widely adopted dedicated media-oriented digital asset blockchains in the enterprise DAM market, but this is not to say they might not become more popular. I note also that while many might not find them to their taste, the blockchain technologies which underpin the asset identifiers of visual assets used with NFTs (Non-Fungible Tokens) would work surprisingly well in the context of enterprise DAM where the financial value and storage location of the asset could become less of a critical factor than permanent records about their provenance.
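The property that makes blockchain-style records attractive for provenance is that they are tamper-evident: each entry's hash depends on the entry before it, so a later alteration can be detected. The Python sketch below shows that one idea using a simple hash chain. It is not a blockchain (there is no distribution or consensus), and the event fields and asset identifiers are invented for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash used before the first event


def chain_hash(previous_hash: str, event: dict) -> str:
    # Each hash covers the event plus the hash of the entry before it.
    payload = previous_hash + json.dumps(event, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(events):
    chain, previous = [], GENESIS
    for event in events:
        current = chain_hash(previous, event)
        chain.append({"event": event, "hash": current})
        previous = current
    return chain


def verify_chain(chain) -> bool:
    # Recompute every hash; any edited event breaks the chain from there on.
    previous = GENESIS
    for link in chain:
        if chain_hash(previous, link["event"]) != link["hash"]:
            return False
        previous = link["hash"]
    return True


events = [
    {"asset": "shoot-001", "action": "ingested", "source": "archive"},
    {"asset": "shoot-001", "action": "generated-variant", "source": "archive"},
]
chain = build_chain(events)
print(verify_chain(chain))
```

If anyone later edits a recorded event, for example to change where a source came from, `verify_chain` returns `False`, which is the kind of permanent provenance record referred to above.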

For anyone not sure of why this is important, imagine a scenario where a request is made to generate a synthetic image via the DAM. The system makes the decision to utilise an image outside the archive as part of the resulting composition. The generated image is widely used for marketing and promotion purposes. Subsequently it is found to be implicated in something scandalous or unpalatable (e.g. a shot of a concentration camp used for a holiday marketing campaign). Without being able to see exactly where the materials came from, these risks are quite hard to fully mitigate against.

It is true that this sort of issue can happen with conventional material shot by photographers (or where stock imagery is used). The difference is liability and accountability. A human being had to make the decision to shoot a scene or use an archived image. Generative AI images are created partially using components selected by an algorithm using semi-opaque rules defined by teams of software developers.

The picture is further complicated by the fact that Generative AI algorithms may use a mixture of existing assets and fully artificial compositions within the same piece. The fact that only a tiny fraction of the overall artwork is from a dubious source is still sufficient to create a potential PR disaster for the firm that used it in their marketing campaigns.

For Generative AI to be trusted, it must be possible to see precisely where it got its digital raw materials from (i.e. the digital asset supply chain) and a full audit of what decisions were made by whom and when – especially if they were automated ones.
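As a rough illustration of what such a check might involve, the Python sketch below lists the sources behind a generated image and flags any that are outside the archive or not cleared for use. The `SourceMaterial` type, its fields and the identifiers are hypothetical. It also assumes the Generative AI tool can report which sources it drew on, which many current tools may not do.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SourceMaterial:
    source_id: str        # identifier of a raw material used in the composition
    in_archive: bool      # True if it is held in the DAM archive
    rights_cleared: bool  # True if it has been cleared for use


def unverified_sources(sources: List[SourceMaterial]) -> List[str]:
    """Return IDs of sources that are outside the archive or not cleared."""
    return [
        s.source_id
        for s in sources
        if not (s.in_archive and s.rights_cleared)
    ]


sources = [
    SourceMaterial("archive/beach-001", in_archive=True, rights_cleared=True),
    SourceMaterial("external/unknown-123", in_archive=False, rights_cleared=False),
]
flagged = unverified_sources(sources)
print(flagged)
```

A single flagged source is enough to warrant review before the generated image is released, since even a small fraction of an artwork coming from a dubious source carries the risk described earlier.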

It should be pointed out that these issues are far from unique to Generative AI, at least in respect of its relationship with Digital Asset Management. There is a compelling need to provide this level of transparency across digital asset supply chains and currently it is not being particularly well addressed by the majority of tools on offer.

Conclusion

Based on the brief analysis I have conducted in this article, it seems inconceivable that Generative AI will not have a significant impact on the Digital Asset Management market (as is true elsewhere). In this regard, there is a complex mix of both threats and opportunities which requires careful evaluation.

Previous AI innovations which the DAM industry has effectively out-sourced to third parties (e.g. image recognition) have generally been unsuccessful in terms of their widespread adoption. As such, those vendors who expect simply to plug into a third-party Generative AI tool, without properly considering the full extent of the opportunity it offers, are likely to see generic and poor quality results which many of their users will not wish to use. Those with the imagination and ability to skilfully apply the creative possibilities to their products, however, will be able to gain a competitive advantage over their peers.

The DAM software market has produced a quite limited and narrow range of innovations over the last 30 years (and the last 15 have been especially barren). Generative AI could provide the stimulus the sector needs, but the sector must be willing to take the appropriate calculated risks in order to benefit. If it does not, DAM might find itself increasingly marginalised as a safe but boring technology that is ultimately irrelevant and of limited usefulness to future cohorts of users.

This article was first published on DAM News on 26th October 2022. Images of Generative AI tools are Copyright Bria.


Excellent article. I also think there will be divergence in DAM tools. Some will be creative production oriented and others will be about locking down what you can use, distribution and compliance. It will depend on the brand and industry.