DAM Scalability Challenges Part 1 – The Digital Asset Supply Chain

This article was written by Ralph Windsor, Editor of DAM News and Project Director of DAM Consultants, Daydream. It is part one in a series of three articles about DAM scalability; see the news post for more information.

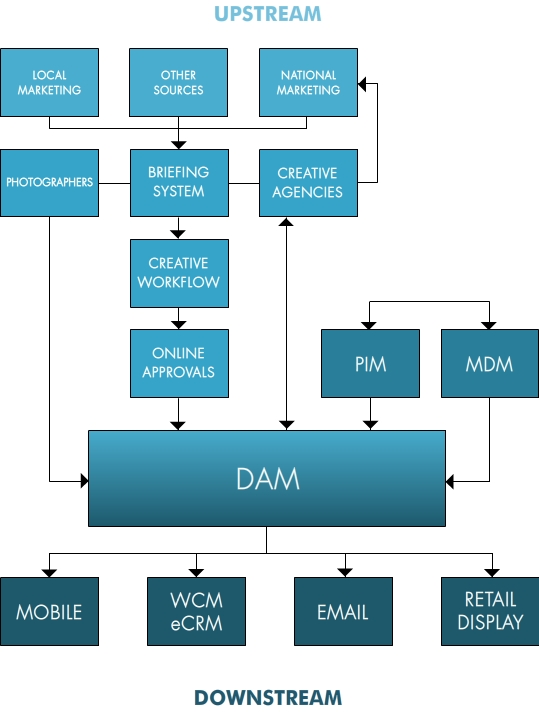

For those unaware of what the term means, Digital Asset Supply Chain refers to the process by which digital materials like rich media and metadata are transformed into digital assets that are distributed to channels such as websites, social media, mobile apps etc. Contemporary DAM implementations are nearly always Digital Asset Supply Chain solutions since they involve some kind of integration, either to get materials into the DAM (referred to as ‘upstream’) or to distribute digital assets to somewhere else (referred to as ‘downstream’). I have also written a longer article about this subject which covers the topic in more detail and a course is available either as part of a DAM News premium subscription or to purchase on its own.

In the past, a lot of this activity might have been carried out manually by human beings uploading or downloading to or from the DAM, but more recently there has been increasing interest in automating these processes via direct integration with other solutions. As such, integration is now considered a core feature of modern DAM technologies, almost to the same extent that something like search is.

Below is a visual representation of a typical Digital Asset Supply Chain.

When it comes to scaling Digital Asset Supply Chains, there are two major considerations:

- The number of counterparties that a DAM needs to exchange data with.

- Throughput or the amount of data that flows into and out of the DAM.

There are a number of subsidiary scalability concerns also, but in this article I shall focus on the above as this addresses the majority of supply chain scalability issues that might be encountered. I will also explain how the issues manifest themselves and what both users and vendors can do to avoid them.

Scaling Integration Counterparties

Integration counterparties refers to the range of other systems (and sometimes human beings) that the DAM connects into. More connections means greater flexibility and increased potential for efficient and automated Digital Asset Supply Chains, but it also implies more moving parts and therefore, more opportunities for things to go wrong.

If the vendor has developed an application architecture which anticipates the need for a lot of integration with ever more complex Digital Asset Supply Chains, then scaling up to support them is far simpler to achieve. When bugs or technical issues are found, resolving them is likely to be less demanding, as fixing an issue with one connector is more likely to prevent problems with another. By contrast, if a lot of connectors to different systems have been developed in an ad-hoc or haphazard manner, there is a far greater risk of unexpected conflicts and more effort required to correct faults. The upshot of this is that any Digital Asset Supply Chain which the vendor’s clients have set up will inherently lack scalability and adding new integrations could reveal a host of new (and hard to predict) problems.
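To make the architectural point concrete, here is a minimal sketch of what a planned connector design might look like. It is not taken from any vendor's product: the `Connector` base class, the `deliver` method and both example connectors are hypothetical names invented for illustration. The idea is simply that behaviour common to all integrations (here, retrying after a temporary failure) lives in one place, so a fix there benefits every connector.

```python
from abc import ABC, abstractmethod


class Connector(ABC):
    """Hypothetical base class: behaviour shared by all connectors lives here."""

    max_attempts = 3

    def deliver(self, asset_id: str) -> bool:
        # Shared retry logic. Fixing a bug here fixes it for every connector.
        for _ in range(self.max_attempts):
            try:
                self._send(asset_id)
                return True
            except ConnectionError:
                continue
        return False

    @abstractmethod
    def _send(self, asset_id: str) -> None:
        """Push one asset to the downstream system."""


class WebCmsConnector(Connector):
    """Illustrative connector that records what it was asked to send."""

    def __init__(self) -> None:
        self.sent = []

    def _send(self, asset_id: str) -> None:
        self.sent.append(asset_id)


class FlakySocialConnector(Connector):
    """Illustrative connector that fails once, to exercise the shared retry."""

    def __init__(self) -> None:
        self.attempts = 0

    def _send(self, asset_id: str) -> None:
        self.attempts += 1
        if self.attempts == 1:
            raise ConnectionError("temporary failure")
```

In the ad-hoc alternative, each connector would carry its own copy of the retry logic, and a fault found in one would have to be hunted down and corrected separately in all the others.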

When I ask vendors about their ability to integrate their DAM with a list of commonly used systems, the ones that are reluctant to commit to doing so and generally question the validity of the whole exercise are those whose DAMs probably lack scalable integration capabilities. It should also be noted that there is a section of firms who will essentially lie through their teeth and swear blind that they can support the required functionality when in reality they cannot; this scenario is probably worse than the former one. With all that said, if you require a scalable Digital Asset Supply Chain (and the chances are, you do) and the vendor is evasive, reticent or uncooperative from the off, then this should raise a red flag about their suitability.

A good number of vendors may use partners to extend what they may refer to as their ‘ecosystem’, or all the other technologies that can connect into their DAM. This can be a double-edged sword. On the one hand, they can scale up the scope of a Digital Asset Supply Chain because they don’t need to develop everything in-house. On the other, if the partner responsible for some component or other has not implemented it satisfactorily, this can create an integration scalability problem also; I will discuss the details of this point in the following section.

One way in which vendors can avoid these issues is to have some guidelines and perhaps even a certification or accreditation process for third party integration components to provide some kind of assurance to their end-users. To achieve this, the vendor does need a certain amount of market clout to both enforce accreditation and (more fundamentally) just to persuade third parties to develop extensions to their platform. If they are a smaller operation, this can be more difficult to achieve. As with all issues in DAM, however, just because the vendor is larger in terms of turnover etc. (or has investment of some kind to help fund their partner programme), this is no guarantee of quality or reliability and you still need to carry out due diligence and not take anything at face value.

One other issue with scaling counterparties is that not every system that the DAM must integrate with will be a commercial product with an API and documentation to support it. This is a particular problem where digital materials are sourced from in-house systems, for example, metadata originating from ERP (Enterprise Resource Planning) or MDM (Master Data Management) systems. Integration with these upstream sources may offer very substantial benefits, but they can also present the most challenges.

Typically, many vendors will refuse to get directly involved with the implementation work for internal systems integration, except through professional services partners and resellers (or sometimes via their own professional services division for an additional cost). A far bigger challenge, however, is getting the cooperation and buy-in from the gatekeepers of the system itself – which are typically IT departments. This can be the point where technical problems escalate into political ones that may require a senior manager sponsor with the necessary influence in order to make any headway.

Many times when I have been involved in Digital Asset Supply Chain projects where an internal system was in the mix, getting access to the people who control it and past all the internal politics can prove to be a lengthy undertaking. This is not to say it isn’t achievable, but costs and timeframes can get quite stretched. If getting your DAM to talk to some internal system is a central pillar of your business case for implementing an automated Digital Asset Supply Chain, try to find out as much as you can about what might be required, ideally before you start reviewing prospective DAM vendors. Further, make sure that all the vendors involved in the selection process are made aware of what you want to do and where you see their role.

The key point to take away from the above discussion is that if the vendor simply has an API, or a large collection of connectors to a lot of other technologies (whether built themselves or using third parties) this does not necessarily mean your Digital Asset Supply Chain will scale across the entire range of counterparties that you need it to. As with many DAM scalability topics, the devil is in the detail and you either need to roll up your sleeves and get stuck in with it or hire someone else with the relevant expertise if you find the prospect of the task somewhat unappealing to tackle yourself.

Scaling Throughput

Throughput refers to the amount of data that flows between the DAM and another solution. Throughput scalability is therefore how well the DAM can deal with larger amounts of data being transferred.

Not every node on the Digital Asset Supply Chain needs to operate at maximum throughput; it depends on whether there are business benefits to scaling it up. For example, if there is a requirement to transfer a few digital assets from the DAM to a social media platform, say once per month, then automating the process offers quite a limited ROI, even if your DAM will support automated integration. On the other hand, if users are wasting hours each day manually downloading and re-uploading hundreds of assets, then the business case is far stronger.

An important issue is assessing the potential to scale, rather than whether it is a requirement right now. Taking the previous example, the business could decide to pursue a far more active social media marketing strategy than they once did. Where before, manually uploading a few ad-hoc digital assets was acceptable, now it is not because the scale of the task has increased and the amount of person-hours needed could make the exercise uneconomic unless automation is in place.

At this stage, throughput issues will tend to become more technical in nature. The following kinds of questions have to be asked:

- Is there a dedicated connector from the DAM to wherever we need to send/receive data?

- Is the performance of the connector satisfactory for the throughput we require?

- If not, is the performance of the API any better?

- Are there limits on how much data we can transfer?

- Does the counterparty system impose limits on us?

- What else do we need to do apart from just getting the data from A to B? Do we need to track usage?
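Several of the questions above (transfer limits, counterparty limits, connector performance) can be turned into a rough back-of-envelope estimate before any testing is done. The sketch below is an assumption-laden illustration rather than a benchmark: the function name and all of its parameters are hypothetical, and it assumes the transfer is constrained either by a request rate limit or by bandwidth, whichever is slower. It ignores retries, processing time and anything else a real integration would add.

```python
def estimate_transfer_hours(
    asset_count: int,
    avg_asset_mb: float,
    requests_per_minute: float,
    bandwidth_mbps: float,
    requests_per_asset: int = 1,
) -> float:
    """Rough lower bound, in hours, for moving a batch of assets.

    All inputs are assumptions you would need to confirm with the
    vendor and the owner of the counterparty system.
    """
    # Time needed if the API rate limit is the bottleneck.
    rate_limited_minutes = asset_count * requests_per_asset / requests_per_minute

    # Time needed if bandwidth is the bottleneck (megabytes * 8 = megabits).
    total_megabits = asset_count * avg_asset_mb * 8
    bandwidth_minutes = total_megabits / bandwidth_mbps / 60

    # The slower of the two constraints determines the estimate.
    return max(rate_limited_minutes, bandwidth_minutes) / 60
```

For example, under these assumptions 6,000 small assets of 1 MB each, sent at a limit of 10 requests per minute over a 100 Mbps link, would take around 10 hours because the rate limit dominates. With 50 MB assets and a limit of 100 requests per minute, bandwidth becomes the constraint instead. Either way, the estimate only tells you where to look; it does not replace testing the connector or API under realistic load.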

Throughput scalability is quite hard to benchmark and test, especially prior to making a purchasing decision. Vendors are quite keen to tell DAM users how many technologies they integrate with, but their scalability (or lack thereof) is less widely publicised. This means that two different products could be advertised as enabling integration between the DAM and another technology, but one of them might operate less efficiently than another and therefore not scale to the extent the business requires. These are issues which will require some in-depth testing to be carried out quite early on to identify if they are relevant or not.

The Weakest Link

As is frequently observed about supply chains of all kinds, they are only as good as their weakest link. If your Digital Asset Supply Chain is dependent on some flaky or untested technology then you could have a hidden problem that might only reveal itself when a DAM API or connector is put under pressure and fails when you start to scale up.

A few years ago, I was asked to consult on a troubleshooting assignment for a client who had complex digital asset distribution requirements involving 30 different downstream destinations that used the assets in the client’s DAM. All of the counterparties were custom solutions, so there were no dedicated connectors and everything relied on the DAM’s own API. The client had received reports from the owners of some systems that their DAM was failing, while others appeared to work without any issues. The vendor claimed the problems were with the counterparties who were reporting faults (and they, in turn, all claimed the opposite). This is a classic complex Digital Asset Supply Chain technical issue which can fairly quickly boil over into a messy political one that takes up a disproportionate amount of everyone’s time to resolve.

At certain key points during the year, the client’s DAM was more heavily used than at others. Some systems made minimal use of the API, whereas others depended on it as a primary data source. After some forensic analysis of the fault reports, it became clear that the problems were more frequently encountered during periods when there was high demand for digital assets. Further investigation of the logs from both the DAM and some of the other systems indicated that the problem was due to the poor scalability of the vendor’s API. If the number of API requests was low, all was well, but when they increased, faults became more frequent. The issue was not with the other systems; it was the DAM. Some basic scalability mistakes had been made in the design and implementation of the API architecture. A colleague and I also found a previously unreported security problem, caused by this same underlying scalability issue, where responses to one request were delivered to another.
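The kind of fault described above (errors that only appear under load, and responses delivered to the wrong requester) is something a simple concurrent test can help to surface. The following is a minimal sketch, not the method used on the assignment described: it assumes the API can echo back a caller-supplied request ID, which a real DAM API may or may not support, and `fake_dam_api` and `run_load_check` are hypothetical names, with the former standing in for whatever client call you would actually make.

```python
import uuid
from concurrent.futures import ThreadPoolExecutor


def fake_dam_api(request_id: str) -> dict:
    """Stand-in for a real API call; assumed to echo the request ID back."""
    return {"request_id": request_id, "status": "ok"}


def run_load_check(call_api, total_requests: int, concurrency: int) -> dict:
    """Send requests concurrently and count failures and mismatched responses."""

    def one_request(_: int) -> str:
        sent_id = str(uuid.uuid4())
        try:
            response = call_api(sent_id)
        except Exception:
            return "error"
        # A response carrying someone else's ID means it was cross-delivered.
        if response.get("request_id") != sent_id:
            return "mismatch"
        return "ok"

    counts = {"ok": 0, "error": 0, "mismatch": 0}
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        for outcome in pool.map(one_request, range(total_requests)):
            counts[outcome] += 1
    return counts
```

Running `run_load_check` at increasing levels of `concurrency` and comparing the counts gives a crude picture of whether faults become more frequent as demand rises. Any non-zero `mismatch` count would warrant immediate attention, since it suggests data is reaching the wrong counterparty.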

Once the problem was identified and proven to be within the scope of their responsibility, the vendor was suitably contrite and swiftly developed a patch which resolved this issue. With that said, a lot of time had been wasted checking other systems, paying for consultants, downtime etc., not to mention shaken user confidence and faith in the stability and efficacy of the DAM. This was negative for all the stakeholders, especially the vendor. The client had plans to use their DAM across the business but, as a result of this incident, decided that the platform was possibly not as good a long-term choice as they once believed it to be. In truth, it was probably less about the product than it was the initially arrogant and evasive attitude of the vendor when a serious problem with the scalability of their integration features was presented to them.

For end-users, this raises an important point when it comes to evaluating DAMs. It is not sufficient to simply ask if the vendor has an API or a connector to the technology you need to integrate with; you also need to check how robust and scalable it is in operation.

As an end-user of a DAM solution, it is important to make a realistic risk assessment of how likely it is that you will need to scale up the amount of data that will flow into or out of your DAM and what the implications would be if something went wrong. Further, you also have to acknowledge that your needs might change. For example, you may think you only need to transfer a small number of assets between your DAM and WCMS (Web Content Management System) each day. However, if the latter then gets used to drive something like an in-store display system, that number could increase dramatically.

It’s hard to predict with complete accuracy how integration needs might wax or wane over the lifetime of the DAM. This is analogous to the way that predicting sales figures is something of an inexact science. The way most businesses deal with this issue is to have a risk management strategy and capacity to scale up (or down) according to how the facts reveal themselves over time. As the saying goes, hope is not a strategy. Equally, however, since you cannot make a 100% accurate prediction for how your Digital Asset Supply Chain will need to scale over time, you need a plan that covers as many potential eventualities as possible.

For vendors, there are several different, but related, challenges. Firstly, there are increasing demands for a wide range of connectors to different third party applications from end-users. At the same time, if these components do not scale up in terms of their capacity to handle lots of requests then this can lead to some unfortunate consequences which may damage the confidence of the client in your products (and your capacity to support them adequately).

I have come across quite a number of vendors who try to manage this problem by abdicating responsibility for integration and requiring the client to handle it all themselves so they can wash their hands of technical issues should they arise. This might seem like a neat way of side-stepping the hassle and complexity involved; however, clients will generally prefer firms who will share at least some of this burden with them. In addition, if it subsequently transpires that the core API itself was the element of the solution that was not robust when scaled up, this contractual ‘get out of jail free’ card might turn out to be of limited value. This is not to mention the extent to which such problems quickly evaporate the good faith the client may have previously had in the competence of the vendor.

There are no shortcuts to solving Digital Asset Supply Chain scalability problems. Providing they are prepared to comprehensively review the market, however, end-users can still find suppliers who will act reasonably over this issue and are prepared to help their clients to achieve their objectives rather than washing their hands of any problems should they be encountered. Vendors who understand the value of being at the centre of the client’s Digital Asset Supply Chain (and who are supportive of their plans to scale it) have an exceptional opportunity to develop a long-term relationship which could be highly lucrative and add a lot of value to their own businesses.

This is the primary benefit of having a scalable Digital Asset Supply Chain for both parties: a mutually advantageous relationship which generates a higher ROI for the client and a reliable long-term revenue stream for the vendor as a result of having a very satisfied and loyal customer.
