Google VISION API: Not Yet A Game Changer

Over the last few weeks, there have been a series of press releases from various tech firms, notably Google and Adobe about technologies which claim to automate the image cataloguing process. A few DAM vendors have already integrated the Google Cloud VISION API which is product-agnostic and designed to be a tool for developers. Adobe Smart Tags is discussed in this blog post, I don’t know if that is open for developers of other solutions to use, but I would imagine it is Adobe products only (i.e. their own Experience Manager platform).

I have written about these technologies in the past and overall, my advice remains the same, but there are some developments which make this worth observing. Whether or not they will provide sufficient benefit to be useful is a harder question to answer, a key problem seems to be that the people who have developed them make limited use of the human-generated metadata already available (especially taxonomies).

Much of the recent promotional activity around these technologies uses stock-in-trade tech marketing nonsense phrases like ‘this is a game-changer’, which prompts sceptics like myself to look for evidence of marketing smoke and mirrors (and I usually don’t have to try that hard to find it). Since the Google API is open and a few vendors have already adopted it, you can take it as a given that within a few weeks or months, numerous other firms will be issuing press releases announcing their ‘game changing automated cataloguing features’ and how unique and innovative they are etc.

I decided to investigate the Google API because it is open and relatively easy to get started with. My technical skills are in steep decline these days, but I was generating results within about 30 minutes. Google’s API is divided into multiple recognition methods, which are described in the documentation:

- LABEL_DETECTION – Execute Image Content Analysis on the entire image and return

- TEXT_DETECTION – Perform Optical Character Recognition (OCR) on text within the image

- FACE_DETECTION – Detect faces within the image

- LANDMARK_DETECTION – Detect geographic landmarks within the image

- LOGO_DETECTION – Detect company logos within the image

- SAFE_SEARCH_DETECTION – Determine image safe search properties on the image

- IMAGE_PROPERTIES – Compute a set of properties about the image (such as the image’s dominant colors)

To process images, they need to be converted into Base64 format. For non-technical readers, this means the binary image data is transformed into text so it can be dispatched to their API to be analysed. This should be fairly easy for most moderately skilled developers, but it might be an issue for anyone who has a very large repository of images if they plan to run all of them through the API (in addition to the costs applied by Google which I’ll cover later).

To present this in a more readable format, I have synthesised the results and removed some of the more esoteric output. I need to make it clear in testing this API, I have carried quite a limited study and this is based on quite a cursory inspection (of the type most DAM solution developers and product managers might do prior to deciding whether to use this or not). There may be further features which enhance the results, so I recommend conducting your own analysis and don’t rely solely on mine.

The label and landmark detection options appear to be the most expressive. Each match is allocated a score (which is the level of confidence that the algorithm has about how accurate it is). Some of the numbers offered for these are debatable and a few of the lower score matches are actually better than those it gives a higher weighting to. There is colour detection also, but this returns RGB values which the developer must then convert into named colours. Some examples generated named colour keywords like ‘blue’ etc via the label recognition, but not all of them.

As longer-term readers might recall, I wrote a DAM News feature article a few years back on findability which although certainly not the last word on this topic, offers some advice which (if followed) should help produce some usable results in terms of metadata for image-based digital assets. With that in mind, I tested the same image of Blackfriars Bridge in London on Google’s API along with some other sample photos of Central London shot by my colleague a few years ago.

The matching terms offered were:

bridge, landform, river, vehicle, ocean liner, girder bridge, watercraft

These are not bad, but ‘ocean liner’ gets a higher score than ‘girder bridge’ and I’m not entirely sure about the validity of ‘vehicle’ either. The other matches (including landmark) produced no results.

The matching terms offered:

Blackfriars, transport, architecture, downtown, house, facade, MARY

The landmark was picked up in this case, because this shot is taken on the north bank of The Thames in proximity to Blackfriars station and the Blackfriars Inn landmark, it also offers some longitude and latitude coordinates which are accurate. The ‘MARY’ keyword is from an advert on the side of the bus (via the OCR/text detection). This seems to be one of the best results of the tested sample, although ‘downtown’ is quite a culturally-specific keyword and while this is commonly used in the USA, it isn’t in the UK (and the subject is in the UK, as the algorithm has been able to ascertain).

Terms offered:

Tree, plant, atmospheric phenomenon, winter, landscape, frost, wall

In contrast to the last example, this was the worst one. In fairness, the image of the fountain in Trafalgar Square is rotated on its side, but that isn’t unusual for photos, especially for scanned images. Only the vaguer keywords ‘landscape’ and ‘atmospheric’ are even close to being usable. If a human keyworder had proposed this metadata, I would want to know if perhaps they had been looking at a different image while cataloguing this one.

To give the algo a second chance, I tried rotating the image to the right so the orientation was correct:

Terms offered:

tree, plant, atmospheric phenomenon, winter, landscape, frost, wall

This seems to have made little difference (I re-uploaded this twice to test it was using the new edition). Again, some obvious keywords like ‘fountain’ which a human being would have picked up immediately don’t feature. It looks like images without contrast and/or clearly defined lines and cause problems for the recognition routines, which isn’t entirely unexpected.

Terms offered:

blue, reflection, tower block, architecture, skyscraper, glass, building, office

This is the other example where the results seem reasonable. ‘Tower block’ is possibly questionable, but the terms suggested are fairly good matches. The shot is of the ‘Palestra’ building in Southwark on the south-side of The Thames (formerly occupied by the London Development Agency). I note that Google Maps is aware of what this building is, but the specific location has not be derived to allow it to offer a location.

Terms offered:

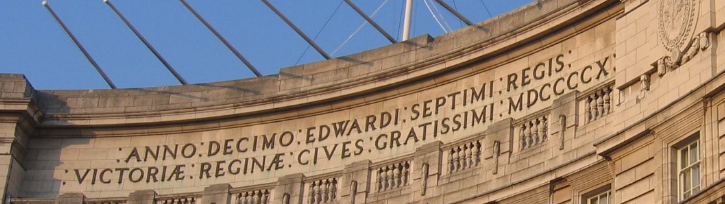

Admiralty Arch, architecture, tourism, building, plaza, cathedral, arch, palace, facade, basilica

The first four or five terms are reasonable, the rest are less successful, although in this case, the higher scoring ones are more appropriate, so a threshold would produce better results (in this case).

The algo has also made an attempt at the Latin text (although it reads like it is about as proficient at that subject as I am).

VICTORIE.REGINE.CI

ANNO:DECIMO

CINE. CIVES CRATISSIMI;MDCCC

CIMO : EDWARDISEPTIMI : REGIS

In terms of keywords, however, these would be usable in a search.

Terms offered:

Trafalgar Square, Big Ben, from Trafalgar Square, blue, monument, sea, statue

This is another mixed bag. The location is Trafalgar Square, but this isn’t Big Ben (which is about half a mile down the road). Most human beings who I have asked to catalogue this image make ‘lion’ their first suggestion, but it’s not even mentioned in this list and apart from the fact that Lord Nelson who stands on top of the column in Trafalgar Square was an admiral, ‘sea’ is a highly tenuous connection, to say the least (and far more of one than ‘statue’ which is ranked lower).

Terms offered:

Trafalgar Square, statue, sky, sculpture, monument, flight, gargoyle

As with other examples, the higher ranked suggestions seem reasonable, but at the lower end, the reliability diminishes and there are some obvious omissions like the stone lion in the foreground (and I can’t see many gargoyles). I suspect the colour has confused the algorithm here because the shape and colour are not pointing to the same match (live lions not usually being black in colour).

Terms offered:

Big Ben, vehicle, jet aircraft

This is one of the easier ones and it has correctly picked out the landmark location, even if the ‘jet aircraft’ isn’t much in evidence in this shot. I would have expected some of the geographic details (i.e. ‘London’ etc) to have been picked out here. The longitude and latitude coordinates are provided which means these can be extracted, but there’s more work for the developer and potential issues from unconventional ones being used.

The photos used in this test are all quite easy examples, they are the sort of tourist snaps of the type that often get sold in microstock photo libraries. Specialist DAM repositories for corporate and public sector organisations usually contain some more challenging subjects. I did try a few examples from some clients I work with and the results were not brilliant, the top keyword was generally acceptable (but not always) and the other terms were often unsuitable. Based on my tests, the Google VISION API still isn’t able to do the job of a human cataloguer, especially if it is of critical importance that the cataloguing is sufficient to allow the photos to be found.

In the findability article I mentioned earlier, one issue that often occurs with corporate libraries is the lack of literal descriptions because those cataloguing them tend to focus on something specific like a product or project name. In that sense, this might be able to help, however, it would be fairly risky to insert these keywords automatically without the user being able to choose which ones to use, as suggestions they could have some potential benefit. A further issue with even allowing that alone though is that there will be many users who (being pushed for free time) will just approve all the automated suggestions anyway so they can get this pesky digital asset cataloguing task out of the way. The result is going to be poorly catalogued digital assets entering the digital supply chain and not being found later on when they really need to be. This can have wider ramifications than them simply not being used. If at a future date some other management decisions or processes are introduced based on the catalogued data, this could start to have a negative impact. For example, does the following kind of dialogue sound implausible?

“We need to find all images with fountains to make sure there aren’t any that show out of date health and safety practices so we don’t use them in marketing materials, let’s do a search for anything with fountains as a keyword.”

“According to the DAM, we don’t have any images with fountains, so we don’t need to worry about checking which ones are not compliant.”

Lots of people will assume that this scenario won’t happen to them, but in a larger organisation where there are multiple decision makers that are not always in communication with each other, this is a tangible risk of relying on this sort of technology which you need to be aware of before you implement it. Those chip away at the ROI case for using this technology and make it more marginal than it first appears.

One other issue to bear in mind with this API is that it isn’t free beyond the first thousand images. There is a variable pricing depending on how many you need to process, but between 1,000 and one million assets, the cost is $20 per 1,000 if you use all the API options. The ‘label’ detection (which is the most useful) costs $5 per thousand. These prices are relatively cheap, compared with hiring someone else to do the cataloguing for you, but the quality will be a lot lower and as I have demonstrated, it’s not really a proper substitute.

Apart from the reliability of this technology, the other issue is that it’s a black box and although the API is open, you can’t see what it’s doing once it gets your images. Based on the different ‘features’ I believe this is using different strategies to do the pattern recognition. In the past when discussing this topic, I have noted that when the task is focussed on a given area (e.g. facial recognition, OCR etc) then the suggestions tend to be better because a more specialised algorithm can be developed. I believe better results could be obtained if it were possible to define your own features based on specific subject types, for example, if your library is mainly about buildings then a set of rules get developed around that. This is one of the reasons why this sort of solution would benefit from being open source because you need to be able to see what it is doing and then either adapt it or develop a plug-in, extension etc that will address the current limitations. In addition to extensions, it doesn’t appear that the Google API can leverage any existing taxonomy which an organisation has already designed, as such, they are effectively ignoring a significant source of knowledge and associated data which might substantially improve the results. Finally, perhaps I missed it, but I couldn’t find a way to grade the suggestions and issue API requests back to help Google optimise them further (if anyone knows how to do this, please feel free to comment).

My conclusion with this is that it’s still in ‘interesting but not ready yet’ category and I don’t believe the firms mentioned in this article have yet made a big enough leap forward in either their understanding nor architecture for it to be the ‘game changer’ that it is billed to be. As with most software implementations, the first 80-90% of the work is usually easier than everyone expected, it’s the last 10-20% which always proves to be the most difficult and this is particularly true in the case of this problem domain.

Share this Article:

We recently completed a project to investigate whether the available visual recognition APIs can add (enough) value to digital asset management to be useful to our clients. As part of this we wanted to find out whether the release of Google Cloud Vision has introduced a step change in auto-tagging. We came to the same conclusion as you (it hasn’t – yet).

For some of our clients, for example those with a large volume of images showing generic subjects such as outdoor scenes, many of the auto-suggested tags were mostly accurate and could be added to an asset’s metadata directly. For most others, in particular those with domain-specific subjects such as product shots, the tags themselves were usually wrong and did not add much value on their own. One angle we explored successfully was combining auto-suggested tags with human oversight, to see if the auto-tags could make it quicker for a human to apply tags to images, for example by grouping similar images together so that they can be tagged en masse.

I think the visual recognition API vendors are missing a trick by not providing effective feedback loops, to enable their systems to learn from a client’s domain-specific images (and to prioritise that domain over the generic subjects). Most DAM applications are full of images and high-quality, human-entered metadata – perfect learning opportunities. We are currently looking at how we can improve the suggested tags by mapping tags coming from the APIs onto the human-entered tags seen in the images already in the DAM system (using probability mappings) – but this is really something the APIs should enable themselves.

I’d be happy to share more details of what we did, and our conclusions. I wrote a blog article about the project: https://www.assetbank.co.uk/blog/ai-in-dam-is-it-ready-yet/

Martin, I saw your article a few days ago (after I’d written mine). It’s a good item you’ve put together on this and I’m not surprised you’ve reached similar conclusions to myself. I think you’re right about the feedback loop and being able to make use of human generated metadata, it seems to be the best approach for improving the results.

Hei

I find the tests you both have done very interesting because you focus on the value of utilizing human generated metadata with computer vision.

I do not know if you’ve heard about the photo agency conference Cepic held every year. A key theme this year is “The Future of Keywording: Auto-tagging will replace manual metadata”.

http://cepic.org/congress/programme.

I think that you Ralph would be an interesting participant in that panel discussion.

Solveig Vikene